Digital Factories are Learning to Listen

Why are smart microphones being used in factories? Are companies planning to eavesdrop on their workforce? The ‘Audio Technology for Intelligent Production’ (AiP) industry working group answers the second question with a clear ‘no’. The group was founded in 2020 at the Oldenburg site of the Fraunhofer Institute for Digital Media Technology IDMT in cooperation with the Emden/Leer University of Applied Sciences, and its network explores how machines interact with acoustic systems and AI. Five scientists and an engineering service provider explain why audio technology is being featured as a future topic at EMO Hannover 2023.

The round table discussion includes Dr Jens Appell, Head, Department of Hearing, Speech and Audio Technology, Fraunhofer IDMT (Oldenburg Branch); Prof Dr-Ing Sven Carsten Lange, Professor, Production Technology, Emden/Leer University of Applied Sciences and Scientific Advisor, Fraunhofer IDMT (Oldenburg), in the field of Hearing, Speech and Neurotechnology for Production; Lorenz Arnold, Managing Director, MGA Ingenieurdienstleistungen GmbH, Process Automation and Control Technology, Würzburg; Marvin Norda, Working Group Coordinator, Fraunhofer IDMT, Oldenburg; Danilo Hollosi, Head, Acoustic Event Detection, Fraunhofer IDMT; and Christian Colmer, Head, Marketing and PR, HSA Branch, Fraunhofer IDMT.

Dr Appell, why are experts from the automotive, aviation, engineering, electronics, and other industries working together with researchers like you in the AiP industry working group?

Dr Appell: We have joined forces to exploit the wide-ranging potential of audio technology in digitalized production and assembly. Together with our industrial partners, we are developing application scenarios, discussing their design, and putting them into practice in joint projects.

Prof Lange, there is nothing new about using microphones within production—for condition monitoring, for example. So what innovative potential does audio technology hold, exactly?

Prof Lange: Acoustic process monitoring has actually been around for some time. However, we are breaking new ground with wide-ranging multimodal applications: using a single sensor to diagnose process characteristics and production processes in parallel and in real time, characterizing machines in terms of their machining status and process capability, and even operating them directly by voice command. None of this requires significant effort or cost to integrate into new or existing machines.

Mr Arnold, can you give us your assessment as a long-standing system integrator in the industry? To what extent is this new territory?

Arnold: Acoustic technology is already being used in production for condition monitoring, but it is not yet fully established. A prime example of new territory here is speech-based human-machine interaction, which has so far only been used in experimental and niche applications in industrial production. The activities of the AiP working group are thus leading the way worldwide. I’m not aware of any competitors in this field, either nationally or internationally, at present.

Mr Norda, you coordinate the AiP working group. What distinguishes your form of voice control from other systems such as Siri or Alexa, and what makes it a global leader?

Norda: The requirements on the factory floor are quite different from those in the living room. What is needed here is an acoustic system that requires no external servers, that runs only on company computers, and that functions reliably even under difficult production conditions, including noise interference. We develop application-specific voice control solutions for use in production which are robust and intuitive. Voice control is easy to integrate and works even without an Internet connection. It is good at recognizing voice commands even under the challenging acoustic conditions found in industrial production.

How do you convince prospective industrial customers about your form of voice control?

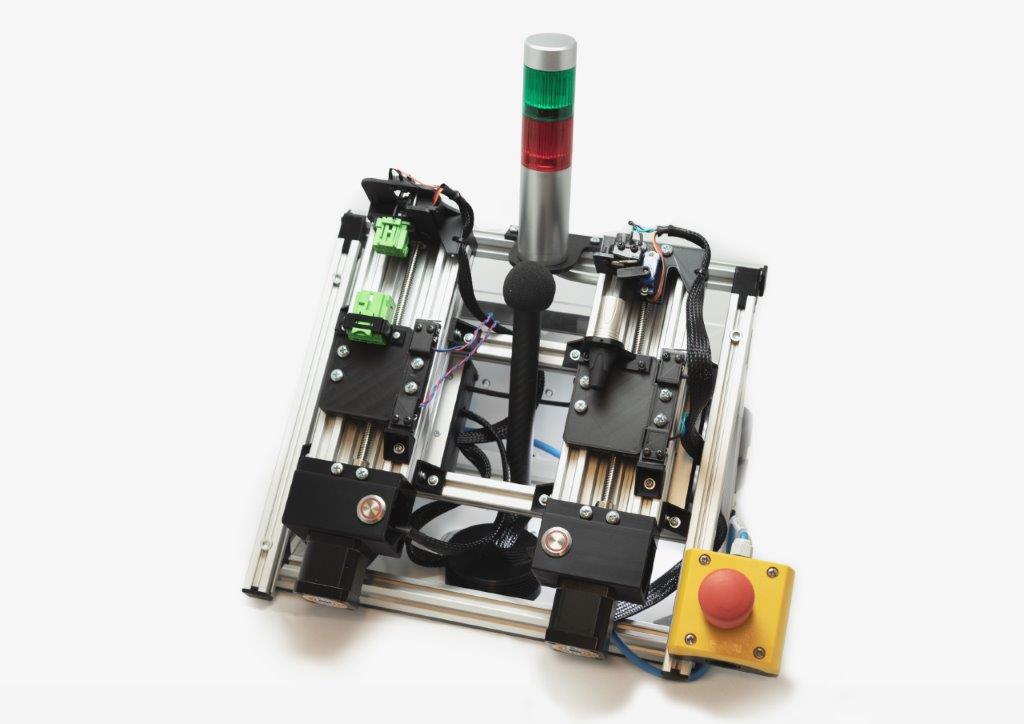

Norda: By showing how it works on a voice-controlled production cell. We have a 5-axis milling machine and a 4-axis robot, both fully equipped with voice control. This technology platform allows customers to test various microphones, headsets, and voice commands in a range of different noise environments. We’ve discovered that testing voice control in an actual machine environment is the fastest way to convince our industry partners.

Why is your voice control system particularly suitable for use in industry?

Norda: The extensive customization and integration support provided by Fraunhofer IDMT allows the speech recognizers to be individually adapted to specific speech commands and machine interfaces. This increases the effectiveness of the voice control and reduces the costs and effort required for integration.

What are the arguments in favor of voice control?

Norda: There are many. Speech is the most natural form of communication. That’s why we’re convinced that speech will also establish itself in industry as a communication interface between humans and machines, similar to smart home or automobile applications. In the industry working group, we are developing a basis for the operating interfaces of the next generation of industrial controllers. This basis will enable contactless and intuitive operation of multiple, complex machines.

But how does your voice control cope with the different types of noise found in production?

Norda: We are currently putting our system to the test by evaluating a speech recognition study with over 160,000 spoken voice commands in different noise environments. As a general rule, it’s not the type of noise that is decisive, but the noise level in relation to the volume of the speaker at the point where the sound reaches the microphone. These kinds of research studies allow us to optimize our speech recognizer for use in industry and to make recommendations about acoustic systems and the best positions for them in the workplace.
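Norda’s rule of thumb is what acousticians call the signal-to-noise ratio (SNR). The sketch below is a minimal illustration, not the AiP group’s evaluation method: it computes the SNR in decibels from two sample blocks assumed to be recorded at the same microphone, and applies the idealized free-field inverse-distance law (roughly 6 dB per doubling of distance) to show why microphone position matters. The synthetic tones, the 16 kHz sample rate, and the 5 cm and 40 cm distances are illustrative assumptions.

```python
import math


def rms(samples):
    """Root-mean-square amplitude of a block of audio samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def snr_db(speech, noise):
    """SNR in dB of speech vs. noise, both assumed measured at the same microphone."""
    return 20.0 * math.log10(rms(speech) / rms(noise))


def distance_attenuation_db(r_ref, r):
    """Level change in dB when a point source moves from r_ref to r metres away.

    Idealized free-field inverse-distance law; reflections on a real shop
    floor will change the actual figure.
    """
    return -20.0 * math.log10(r / r_ref)


# Synthetic stand-ins (assumption): a 'voice' tone and a 10x quieter 'machine noise' tone.
RATE = 16000
speech = [0.5 * math.sin(2 * math.pi * 200 * n / RATE) for n in range(RATE)]
noise = [0.05 * math.sin(2 * math.pi * 1234 * n / RATE) for n in range(RATE)]

snr_headset = snr_db(speech, noise)
# Same voice, but the microphone is 8x farther from the mouth (5 cm -> 40 cm);
# the noise level at the microphone is assumed to stay the same.
snr_far = snr_headset + distance_attenuation_db(0.05, 0.40)
print(f"headset: {snr_headset:.1f} dB, distant mic: {snr_far:.1f} dB")
```

Under these assumptions, moving the microphone eight times farther from the speaker costs about 18 dB of SNR, which illustrates why the position of the acoustic system in the workplace matters as much as the type of noise.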

Fraunhofer IDMT’s click recognition technology can be integrated into the reporting system and displayed on an interface. Image Source: Fraunhofer IDMT

Fraunhofer IDMT trains not only the machine operators but also, using machine learning, its speech recognizers to improve their accuracy. Image Source: Fraunhofer IDMT

The smart sensor system can detect the clicking sound when plug connections engage. If there is no click, the acoustic monitoring system registers an error. Image Source: Fraunhofer IDMT/HannesKalter

But control manufacturers are also experimenting with voice control, aren’t they?

Norda: The idea isn’t new, and many control manufacturers have already put forward their own solutions. However, there has been little or no widespread industrialization of voice control so far. Our aim is to optimize efficiency and robustness levels by consistently refining our speech recognizers for use in future production environments.

Which manufacturer did you work with to create the automated production line?

Norda: We have successfully integrated our algorithms into a Beckhoff industrial controller based on a Windows or Linux platform. We are also developing similar solutions for controllers from all the other well-known manufacturers.

So your speech recognition software is not running from the cloud or on a separate PC, but on the controller in the machine itself. Is it possible to operate multiple machines by voice control?

Norda: Multi-machine operation is the pinnacle of voice control because of the complexity of the machine commands, the walking distances to the machines, and the cognitive demands placed on the operator. Acoustically, however, there is no difference between operating just one or several machines simultaneously. Just like with a touchscreen, all you need is a master computer which routes the commands to the right machine.
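The master-computer idea can be sketched as a small dispatcher. This is a hypothetical illustration, not Fraunhofer IDMT’s software: it assumes the speech recognizer has already produced a text command whose first word may name a machine, and that each machine exposes some kind of `execute` call. `Machine`, `CommandRouter`, and the ‘last addressed machine’ rule are all invented for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Machine:
    """Hypothetical stand-in for one machine's controller interface."""
    name: str
    log: list = field(default_factory=list)

    def execute(self, command: str) -> str:
        # A real system would call the controller here; this sketch only records it.
        self.log.append(command)
        return f"{self.name}: {command}"


class CommandRouter:
    """Routes recognized commands of the form '<machine> <command ...>'.

    If no machine is named, the most recently addressed machine is used
    (an assumed convention, not taken from the article).
    """

    def __init__(self, machines):
        self.machines = {m.name: m for m in machines}
        self.active = None

    def route(self, utterance: str) -> str:
        words = utterance.lower().split()
        if words and words[0] in self.machines:
            self.active, words = words[0], words[1:]
        if self.active is None:
            raise ValueError("no machine addressed yet")
        if not words:
            raise ValueError("no command given")
        return self.machines[self.active].execute(" ".join(words))


router = CommandRouter([Machine("mill"), Machine("robot")])
print(router.route("mill start spindle"))  # mill: start spindle
print(router.route("coolant on"))          # mill: coolant on
print(router.route("robot move home"))     # robot: move home
```

Because routing happens after recognition, the acoustic front end is the same whether one or several machines are connected, which matches Norda’s point.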

Arnold: For me, multi-machine operation is an ideal application for proving that voice operation is not merely a technical gimmick. The result is a quantifiable increase in efficiency that can be quite considerable, depending on the application. I’ll give you an example from my current work: A customer owns 25 machine tools, with five operators handling them. I’m now trying to convince them to use voice control. One benefit would be shorter walking distances, because an operator wearing a headset can control one machine while standing at another some distance away. And the machine will notify the operator remotely if there is a malfunction.

What other types of added value do acoustics offer?

Prof Lange: Our acoustic systems are competing with established technology. Structure-borne sound sensors integrated into the machine for the detection of chatter noise, for example, represent state-of-the-art technology. However, these sensors cannot record other, equally relevant process and machine status information. A microphone, on the other hand, installed in a suitable position in or around the machining area can simultaneously monitor the main spindle bearing, the fan, and the cooling lubricant supply, perform touch detection, and record voice commands. The user value rises exponentially when our smart sensor technology is enhanced with AI-based algorithms.

Mr Hollosi, you and your team are developing smart sensors. What tasks are they already performing?

Hollosi: Our acoustic process monitoring is contactless and works with airborne or structure-borne sound. The smart sensor system can detect the clicking sound when plug connections engage. If there is no click, the acoustic monitoring system registers an error. The operator is informed, too, and the event is automatically documented. My team and I have developed AI-based algorithms for reliable audio analysis of all kinds of click sounds. Our solution has already proven itself in trials on the assembly of cable harnesses in the automotive industry.
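The pass/fail logic of click monitoring can be illustrated with a deliberately simple energy detector. This is a stand-in, not the AI-based algorithms Hollosi describes: it assumes one audio recording per plug connection, flags a click when any short frame is much louder than the median background frame, and logs an error otherwise. The 64-sample frame length, the threshold ratio of 10, the connector IDs, and the synthetic signals are arbitrary assumptions.

```python
import random
import statistics


def frame_energies(samples, frame_len):
    """Mean energy of consecutive, non-overlapping frames."""
    return [
        sum(s * s for s in samples[i:i + frame_len]) / frame_len
        for i in range(0, len(samples) - frame_len + 1, frame_len)
    ]


def click_detected(samples, frame_len=64, ratio=10.0):
    """True if some frame is `ratio` times louder than the median frame.

    A crude transient detector standing in for a trained classifier.
    """
    energies = frame_energies(samples, frame_len)
    background = statistics.median(energies)
    return any(e > ratio * background for e in energies)


def check_connection(samples, connector_id, report):
    """Documents OK, or an error if no click was heard for this connection."""
    ok = click_detected(samples)
    report.append((connector_id, "OK" if ok else "ERROR: no click"))
    return ok


# Synthetic recordings (assumption): low-level background noise, with and
# without a short, loud 32-sample burst standing in for the engaging click.
rng = random.Random(0)
quiet = [rng.uniform(-0.01, 0.01) for _ in range(8000)]
with_click = quiet[:4000] + [0.8 * (-1) ** n for n in range(32)] + quiet[4032:]

report = []
check_connection(with_click, "X12", report)
check_connection(quiet, "X13", report)
print(report)  # [('X12', 'OK'), ('X13', 'ERROR: no click')]
```

A fixed energy threshold would also fire on other impacts, such as a dropped tool, which is one reason a classifier trained on the characteristic sound of a click is preferable in practice.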

The results and work of the AiP working group are good examples of the event’s new slogan, ‘Innovate Manufacturing’, which the VDW (German Machine Tool Builders’ Association), as organizer, is using to attract experts from all over the world to EMO Hannover from 18 to 23 September 2023. Surely this would be an ideal opportunity for you to present your solutions at the ‘world’s leading trade fair for production technology’?

Colmer: Representatives from our institute and from the working group’s partner companies will definitely be there in Hanover to hold in-depth discussions on acoustics with customers and to sound out new application scenarios.
