SMART SENSING

New, state-of-the-art smart sensors have evolved to provide unprecedented levels of intelligence and communication capability, maximizing the potential advantages that the Industrial Internet of Things (IIoT) offers and extending the useful life of legacy industrial equipment. Here is a closer look at these sensors and the types that are set to revolutionize industrial automation.

A transformative advance in the field of sensor technology has been the development of smart sensor systems. Smart sensors are basic sensing elements with embedded intelligence. The sensor signal is fed to a microprocessor, which processes the data and provides an informative output to an external user. The smart sensor is also a crucial and integral element of the Internet of Things (IoT), the increasingly prevalent environment in which almost anything imaginable can be outfitted with a unique identifier and the ability to transmit data over the internet or a similar network.

Evolution in smart sensing technology

Sensors are key to modernizing industrial automation, but conventional sensor types, which simply convert physical variables into electrical signals, may not provide enough information in the new world of IIoT. New, state-of-the-art smart sensors maximize the potential advantages that IIoT offers. They have evolved to provide unprecedented levels of intelligence and communication capability, and they can extend the useful life of legacy industrial equipment.


Types of technological improvement

Single Coil to Multi-Coil Sensor: Non-contact inductive technology detects position in a few different configurations. Traditionally, one sensor housing is combined with a single inductive coil to provide a discrete or analog output. Today, one sensor housing can instead be combined with many inductive coils to measure linear or rotary position.

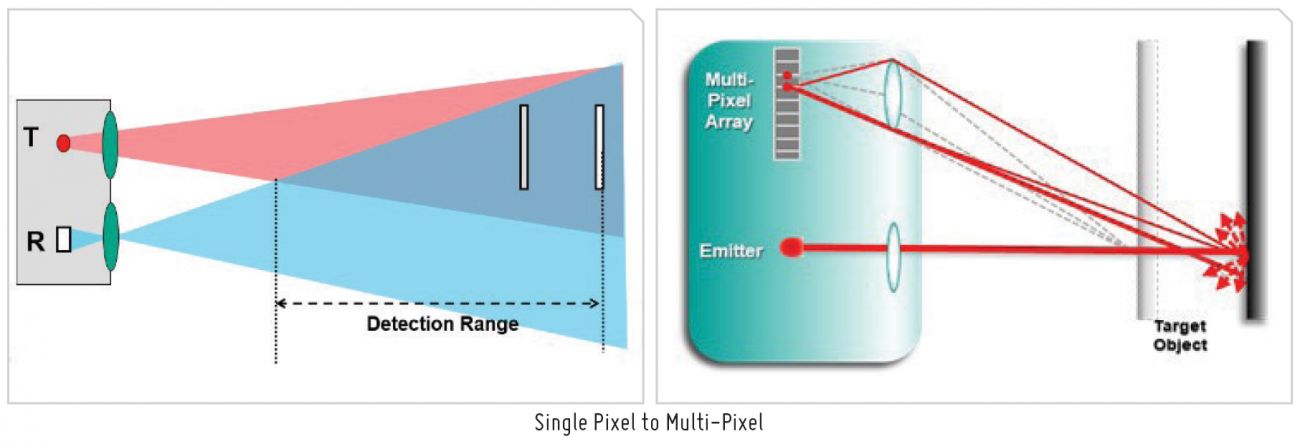

Single Pixel to Multi-Pixel: A single-pixel sensor evaluates the amount of light energy returned to the receiver. Traditionally, this is one of the most basic methods used in photoelectric sensors to detect a target, but as applications become more complicated, more precise and accurate measurement is required. This is where multi-pixel technology comes in: advanced triangulation tracks the position of the light spot along a multi-pixel array. It measures the true distance to an object, regardless of color, with up to sub-millimeter precision.

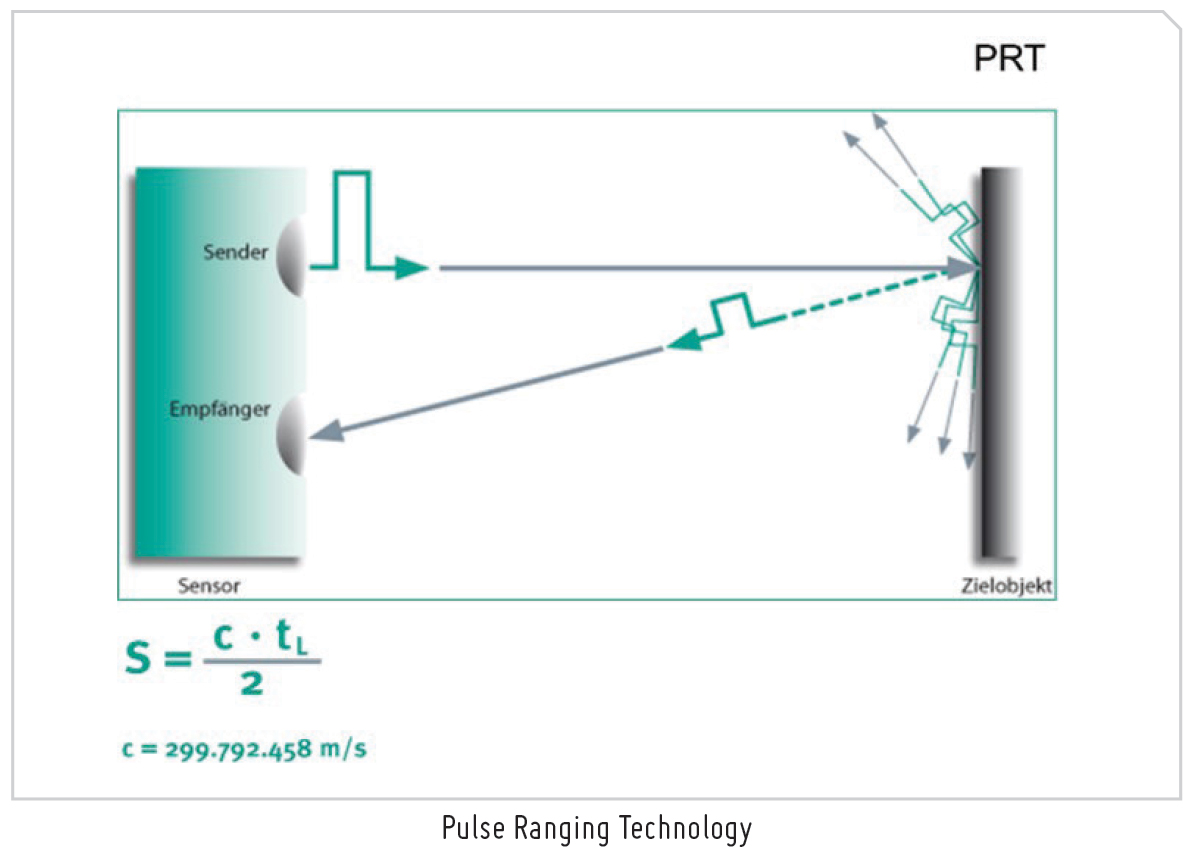
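The triangulation principle can be illustrated with a minimal sketch. It assumes a simple geometry in which the emitter and the receiver lens are separated by a known baseline, and the spot position on the pixel array is measured as an offset from the optical axis. The function names, parameters, and sample values are hypothetical and are not taken from any specific sensor.

```python
def spot_centroid(intensities):
    """Estimate the light spot position with sub-pixel resolution.

    Uses the intensity-weighted centroid of the pixel readings, which is
    one common way to locate a spot more finely than one pixel.
    """
    total = sum(intensities)
    if total <= 0:
        raise ValueError("no light received on the pixel array")
    return sum(index * value for index, value in enumerate(intensities)) / total


def triangulation_distance_mm(spot_offset_px, pixel_pitch_mm,
                              focal_length_mm, baseline_mm):
    """Distance to the target from the spot offset on the pixel array.

    Similar triangles give: distance / baseline = focal_length / offset.
    The offset is assumed to be measured from the optical axis.
    """
    offset_mm = spot_offset_px * pixel_pitch_mm
    if offset_mm <= 0:
        raise ValueError("spot must be displaced from the optical axis")
    return focal_length_mm * baseline_mm / offset_mm


# Illustrative values only: a spot centred on pixel 3 of a short array.
spot_px = spot_centroid([0, 0, 1, 3, 1, 0])
distance = triangulation_distance_mm(100, 0.01, 10.0, 30.0)
```

Because the result depends on where the spot lands rather than on how much light comes back, the measured distance is largely independent of target color.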

Pulse Ranging Technology: In this measurement method, a powerful light source emits short, high-energy pulses, which are reflected by the target object and then recaptured by a light-sensitive receiver. During this process, the emission and reception times are detected with a high degree of precision. From the values determined, the distance to the target object is calculated using the runtime of the light pulses. If the target object is close, the light propagation time is short. If the object is further away, the light propagation time is longer.


The strength of this technology lies in the power density of the light pulses, which is 1,000 times greater than in sensors with constant light sources. The benefits include long measurement and detection ranges and high levels of precision. Negative influences such as extraneous light or differing reflection characteristics do not impair the function of Pulse Ranging Technology (PRT) sensors.
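The distance calculation behind pulse ranging can be sketched as follows. The pulse travels to the target and back, so the one-way distance is half the round-trip path. The function name and the example timing are assumptions for illustration; a real sensor performs this timing in dedicated hardware.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # speed of light in vacuum


def distance_from_pulse(t_emit_s, t_receive_s):
    """Distance to the target from pulse emission and reception times.

    The light covers the sensor-to-target path twice, so the round-trip
    time is halved before multiplying by the speed of light.
    """
    round_trip_s = t_receive_s - t_emit_s
    if round_trip_s < 0:
        raise ValueError("reception time must not precede emission time")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


# A target 10 m away returns the pulse after roughly 66.7 nanoseconds.
round_trip_for_10_m = 2 * 10.0 / SPEED_OF_LIGHT_M_PER_S
distance_m = distance_from_pulse(0.0, round_trip_for_10_m)
```

The very short times involved explain why emission and reception must be detected with such high precision: an error of one nanosecond corresponds to about 15 cm of distance.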


Smart Positioning: In the automation world, many different types of positioning systems are available. Today, positioning is shifting from mechanical, encoder-based systems to more advanced camera-based systems. Rotary encoders use optical or magnetic sensing to monitor position, angle, and velocity precisely. They come with different mechanical and electrical connection options and a wide selection of accessories. However, encoders take some effort to mount: they must be fixed to a mechanical shaft or coupling with the correct torque and proper alignment. In the digital age, 2D camera-based alternatives are available, such as Data Matrix positioning systems. These provide non-contact, linear, absolute positioning by reading Data Matrix codes to position vehicles along a track in the X-axis.

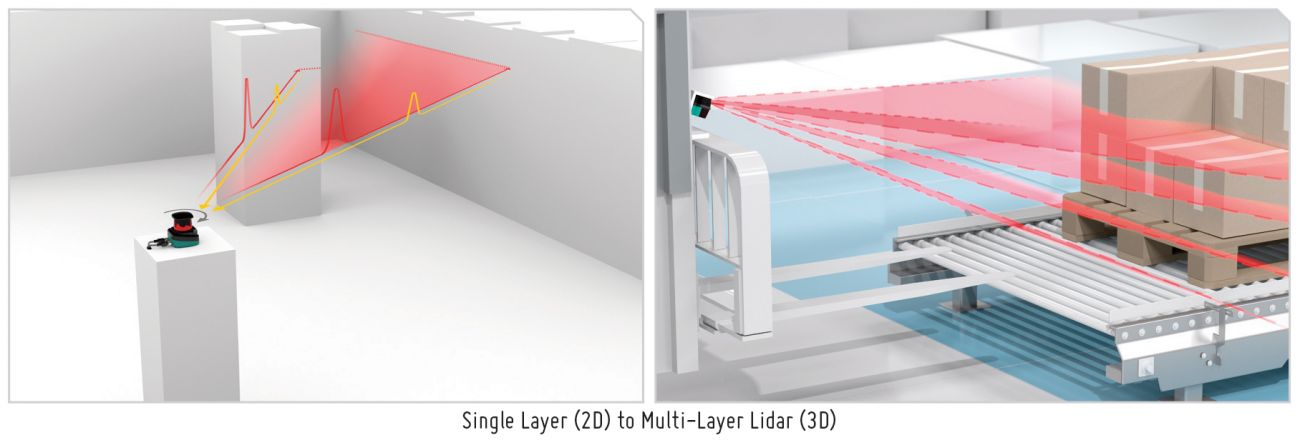
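As a rough illustration of how a code-based system can yield an absolute position, the sketch below assumes that each Data Matrix code on the track encodes its own index, that codes are spaced at a fixed pitch, and that the image X-axis is aligned with the track. None of these details come from a specific product; the function name and all parameters are hypothetical.

```python
def absolute_position_mm(code_index, code_pitch_mm,
                         code_center_px, image_center_px, mm_per_px):
    """Absolute X position of the read head along the track.

    code_index      -- index decoded from the Data Matrix code (assumed)
    code_pitch_mm   -- spacing between neighbouring codes (assumed fixed)
    code_center_px  -- X pixel coordinate of the code centre in the image
    image_center_px -- X pixel coordinate of the image centre
    mm_per_px       -- image scale at the track surface
    """
    # Coarse position: where the decoded code sits on the track.
    code_position_mm = code_index * code_pitch_mm
    # Fine correction: how far the code appears from the image centre.
    # A code seen to the right of centre means the head is to its left.
    offset_mm = (code_center_px - image_center_px) * mm_per_px
    return code_position_mm - offset_mm


# Illustrative values: code 125 on a 20 mm pitch, seen 20 px right of centre.
position = absolute_position_mm(125, 20.0, 340, 320, 0.1)
```

Because every code identifies its own location, the position is known immediately after power-up, with no reference run and no mechanical coupling to a shaft.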

Single Layer (2D) to Multi-Layer LiDAR (3D): 2D LiDAR sensors use a single plane of lasers to capture X and Y dimensions. This can be accomplished with a continuous ring of projected light or a single spinning laser beam. Either way, ring lasers and 2D LiDAR sensors collect the same type of X and Y dimensional data. Moving the sensor down a pipe allows successive slices of 2D data to be collected, and these are often presented in 3D formats.
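Stacking 2D slices into a 3D data set can be sketched as below. The example assumes each scan delivers ranges at evenly spaced angles in the scan plane and that the travel distance of the sensor along the pipe is known for each scan, for instance from an odometer. The function name and parameters are illustrative assumptions.

```python
import math


def slice_to_points(ranges_m, start_angle_rad, angle_step_rad, travel_m):
    """Convert one 2D scan into 3D points.

    Each range/angle pair is converted from polar to Cartesian X and Y.
    The third coordinate is the sensor's travel distance along the pipe
    at the moment the slice was captured.
    """
    points = []
    for index, range_m in enumerate(ranges_m):
        angle = start_angle_rad + index * angle_step_rad
        x = range_m * math.cos(angle)
        y = range_m * math.sin(angle)
        points.append((x, y, travel_m))
    return points


# Two slices taken 0.5 m apart combine into one 3D point list.
cloud = []
cloud += slice_to_points([1.0, 2.0], 0.0, math.pi / 2, 0.0)
cloud += slice_to_points([1.0, 2.0], 0.0, math.pi / 2, 0.5)
```

The depth information here comes from the motion of the sensor, not from the sensor itself, which is the key difference from a true 3D LiDAR.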

Over the past decade, there have been significant advances in 3D sensor technologies and methodologies. 3D LiDAR sensors function like their 2D counterparts, but additional measurements are taken along the Z axis to collect real 3D data. The third-axis data collection is most often accomplished with multiple lasers at varying angles or lines of vertical projection. Modern laser projection and wide-area scanning technologies allow high-accuracy, high-resolution 3D data to be collected without blind spots.
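For a multi-layer sensor, each laser layer has its own vertical angle, so a single return can be placed directly in 3D space. The sketch below assumes each return is reported as a layer index, a horizontal (azimuth) angle, and a range, and that the elevation angle of every layer is known. The function names, data layout, and sample angles are hypothetical.

```python
import math


def lidar_return_to_xyz(range_m, azimuth_rad, elevation_rad):
    """Convert one return from spherical to Cartesian coordinates."""
    horizontal = range_m * math.cos(elevation_rad)  # projection onto X-Y plane
    x = horizontal * math.cos(azimuth_rad)
    y = horizontal * math.sin(azimuth_rad)
    z = range_m * math.sin(elevation_rad)
    return (x, y, z)


def scan_to_points(returns, layer_elevations_rad):
    """Convert (layer_index, azimuth_rad, range_m) tuples into 3D points."""
    return [
        lidar_return_to_xyz(range_m, azimuth, layer_elevations_rad[layer])
        for layer, azimuth, range_m in returns
    ]


# Illustrative four-layer sensor with layers spread between -3 and +3 degrees.
elevations = [math.radians(a) for a in (-3.0, -1.0, 1.0, 3.0)]
points = scan_to_points([(0, 0.0, 5.0), (3, 0.0, 5.0)], elevations)
```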

2D Vision to 3D Vision Sensors: The traditional two-dimensional machine vision system, when used in tandem with imaging library software, has proven very successful in applications such as barcode reading, presence detection, and object tracking, and these technologies are only improving with time. However, since 2D cameras simply take an image of light reflected from the object, changes in illumination can have adverse effects on accuracy when taking measurements. Too much light can create an overexposed shot, leading to light bleeding or blurred edges of the object, while insufficient illumination can reduce the clarity of edges and features in the two-dimensional image. In applications where illumination cannot be easily controlled, and therefore cannot be adjusted to fix the shot, this creates a problem for 2D machine vision systems.

3D machine vision cameras can offset this by recording accurate depth information and generating a point cloud, which represents the object far more accurately. Every pixel of the object is accounted for in space, and the user is provided with X, Y, and Z position data as well as the corresponding rotational data for each of the axes. This makes 3D machine vision an exceptional option compared to 2D for applications involving dimensioning, space management, thickness measurement, Z-axis surface detection, and quality control involving depth.
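One common way to turn a depth image into a point cloud is to back-project each pixel through a pinhole camera model. The sketch below assumes the camera intrinsics (focal lengths `fx`, `fy` and principal point `cx`, `cy`, all in pixels) are known from calibration and that a depth value of zero marks a pixel with no valid measurement. These names and conventions are assumptions for illustration, not the interface of any particular camera.

```python
def depth_to_point_cloud(depth_mm, fx, fy, cx, cy):
    """Back-project a depth image into a list of (x, y, z) points in mm.

    depth_mm -- 2D list of depth values, indexed as depth_mm[row][column]
    fx, fy   -- focal lengths in pixels
    cx, cy   -- principal point in pixels
    """
    points = []
    for v, row in enumerate(depth_mm):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # skip pixels without a valid depth reading
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# Tiny 2x2 depth image; the top-left pixel has no valid depth.
cloud = depth_to_point_cloud([[0, 1000], [1000, 1000]],
                             fx=500.0, fy=500.0, cx=0.5, cy=0.5)
```

Since each point carries a measured Z value, dimensions such as height or thickness can be computed from the geometry directly instead of being inferred from brightness.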

LAKSH MALHOTRA, Product Manager, Pepperl+Fuchs Factory Automation Pvt Ltd
